DeepSeek has become a significant player in artificial intelligence, in part through its work on multimodal models. These models combine several forms of data, including text, images, and audio, to support richer, more context-aware interactions. This article examines the performance, open-source initiatives, commercial applications, and ethical considerations surrounding DeepSeek’s multimodal models.

Performance Analysis of DeepSeek’s Multimodal Models

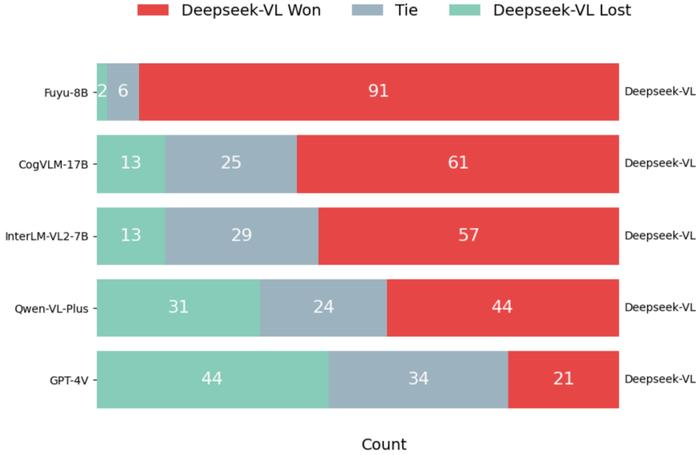

DeepSeek’s multimodal work has produced several notable results. The Align-DS-V model, developed in collaboration with Peking University and the Hong Kong University of Science and Technology, has shown strong performance in visual understanding tasks. For instance, Align-DS-V is reported to have outperformed GPT-4o on several benchmarks, such as llava-bench-coco. DeepSeek’s Janus-Pro model has also drawn attention for its efficiency, particularly in image generation. Compared with models like DALL-E 3, Janus-Pro has reported higher scores on text-to-image benchmarks such as GenEval and DPG-Bench, although challenges remain.

Open Source Initiative and Community Impact

One distinguishing feature of DeepSeek’s approach is its commitment to open source. By making its models and code publicly available, DeepSeek aims to foster a collaborative environment in which developers and researchers can both contribute to and benefit from advances in AI. This strategy speeds the spread of the technology and encourages innovation and customization. Open-source models also face challenges, however, such as ensuring security, managing contributions, and maintaining high standards of performance. Despite these hurdles, the open-source community around DeepSeek has grown rapidly, with contributions from developers around the world.

Commercialization and Industry Applications

DeepSeek’s multimodal models have broad commercial potential. In healthcare, models such as “Qi Huang” have been developed to assist in medical diagnosis by analyzing medical images together with patient records. Similarly, in finance, DeepSeek’s “Tian Yuan” engine uses multimodal data to improve risk management and fraud detection. These applications show how multimodal models can be applied to complex real-world problems. DeepSeek’s partnerships with companies and institutions have also helped deploy these models across a range of industry settings.

Ethical Considerations and Future Challenges

As with any advanced technology, the development and deployment of multimodal models raise ethical concerns. Issues such as bias in data, potential misuse of generated content, and the impact on employment are critical areas that need to be addressed. DeepSeek is actively engaged in developing frameworks and guidelines to ensure responsible AI practices. This includes efforts to mitigate biases, enhance transparency, and promote the ethical use of AI technologies. The future of multimodal models will depend on striking a balance between innovation and ethical considerations, ensuring that these powerful tools are used for the greater good.

Conclusion

DeepSeek’s work on multimodal models has reached significant milestones and shows promising prospects. By outperforming competitors on some benchmarks, fostering an open-source community, and driving industry applications, DeepSeek has positioned itself as a leader in the AI field. The road ahead is not without challenges, however, particularly in addressing ethical concerns and ensuring sustainable development. The continued evolution of DeepSeek’s multimodal models is likely to shape the trajectory of AI and to offer new possibilities across multiple domains.

Frequently Asked Questions

- How does DeepSeek’s performance compare to other leading multimodal models?

- DeepSeek’s models, such as Align-DS-V and Janus-Pro, have shown competitive performance on various benchmarks. For example, Align-DS-V is reported to have outperformed GPT-4o on several visual understanding benchmarks, such as llava-bench-coco, while Janus-Pro has drawn attention for its efficiency in image generation. Performance varies with the specific task and benchmark, so these results should not be read as a general ranking.

- What are the benefits of DeepSeek’s open-source strategy?

- The open-source approach allows for greater accessibility and collaboration, enabling developers and researchers to contribute to and customize the models. This strategy accelerates innovation and fosters a community-driven development process.

- In which industries are DeepSeek’s multimodal models being applied?

- DeepSeek’s models are being used in healthcare for medical diagnosis, in finance for risk management, and in other sectors where multimodal data can provide valuable insights. These applications leverage the models’ ability to process and integrate different types of data to solve complex problems.

- What are the main ethical concerns related to DeepSeek’s multimodal models?

- The primary ethical concerns include potential biases in the data used to train the models, which can lead to unfair or inaccurate outcomes. Additionally, there are concerns about the misuse of generated content, such as the creation of misleading or harmful images or text. Ensuring transparency and accountability in how the models are used is also crucial to mitigate these risks.

- How does DeepSeek address the challenges of open-source models?

- DeepSeek implements robust mechanisms to manage contributions and ensure the security of its open-source models. This includes regular audits, community guidelines, and efforts to maintain high standards of performance and reliability. By fostering a collaborative yet controlled environment, DeepSeek aims to maximize the benefits of open-source while minimizing potential risks.

- What is the future outlook for DeepSeek’s multimodal models?

- The future of DeepSeek’s multimodal models is promising, with ongoing research and development focused on improving performance, expanding capabilities, and addressing ethical concerns. As these models become more sophisticated and widely adopted, they are expected to drive further advancements in AI and contribute to solving complex real-world challenges across various industries.