In the days leading up to the 2025 Spring Festival, as red lanterns went up in the streets and households prepared for the New Year, the Chinese AI company DeepSeek suddenly became a global sensation with the release of its new model, DeepSeek-R1. The model matched the official release of OpenAI’s o1 on several reasoning benchmarks, was reportedly trained at a fraction of the usual cost, and was released with open weights under the MIT license. The launch caused a stir in the global AI community, and many are asking why DeepSeek-R1 drew such widespread attention.

Performance Advantages of DeepSeek-R1

DeepSeek-R1 performs strongly across a range of tasks. On AIME 2024 it achieved a pass@1 score of 79.8%, slightly surpassing OpenAI-o1-1217. On MATH-500 it scored 97.3%, on par with OpenAI-o1-1217 and well ahead of most other models evaluated. In coding, DeepSeek-R1 reached expert level, with a 2,029 Elo rating on Codeforces, higher than 96.3% of human participants. These results show that DeepSeek-R1 is competitive with the best models in the industry and even leads on some benchmarks, such as AIME 2024.
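The pass@1 figures above estimate how often a single sampled answer is correct. The R1 technical report describes computing them by sampling k responses per problem (with non-zero temperature) and averaging their correctness, pass@1 = (1/k) Σ p_i, where p_i is 1 if the i-th response is correct and 0 otherwise. A minimal Python sketch of that averaging follows; the function names and the sample data are hypothetical, and answer checking is assumed to have already happened.

```python
from statistics import mean


def pass_at_1(correct: list[bool]) -> float:
    """pass@1 for one problem: the fraction of its k sampled responses judged correct."""
    if not correct:
        raise ValueError("need at least one sampled response")
    return sum(correct) / len(correct)  # (1/k) * sum of p_i, with p_i in {0, 1}


def benchmark_pass_at_1(results: list[list[bool]]) -> float:
    """Benchmark score in percent: per-problem pass@1 averaged over all problems."""
    return 100 * mean(pass_at_1(samples) for samples in results)


# Made-up results: 3 problems, k = 4 sampled responses each.
results = [
    [True, True, True, False],    # 0.75
    [True, True, True, True],     # 1.00
    [False, True, False, False],  # 0.25
]
print(f"pass@1 = {benchmark_pass_at_1(results):.1f}%")  # pass@1 = 66.7%
```

Averaging over several samples smooths out the run-to-run variance that a single sampled answer would have.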

Open-Source Strategy and Community Engagement

One of the key factors behind DeepSeek-R1’s popularity is its open-source strategy. Unlike OpenAI and Google, which keep their flagship models closed, DeepSeek released R1’s weights under the MIT license and published a technical report describing its architecture and training method. This set off a wave of reproduction efforts: researchers at the University of California, Berkeley and the Hong Kong University of Science and Technology reproduced key parts of the training recipe at smaller scale, and HuggingFace launched a project to rebuild the full pipeline in the open. By sharing its technology, DeepSeek has attracted a large number of developers and researchers, building a vibrant community that can further improve the model and build on it.

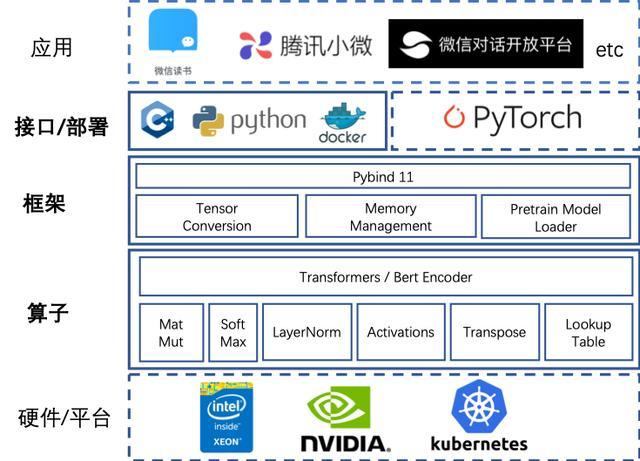

Cost Efficiency and Commercial Potential

DeepSeek-R1’s cost efficiency is another major reason for its success. Its training relies heavily on large-scale reinforcement learning with rule-based rewards rather than on large amounts of manually labeled data; its precursor, DeepSeek-R1-Zero, was trained with reinforcement learning alone, without a supervised fine-tuning stage, and developed its reasoning behaviors through trial and error. This lowers the cost of preparing training data. R1 is also built on DeepSeek-V3, whose final training run was reported to cost less than $6 million (about 2.788 million H800 GPU hours at an assumed rental price of $2 per GPU hour). That figure excludes earlier research and experiments, but it is still roughly one-tenth of the estimated cost of comparable models in the United States. In addition, DeepSeek’s API pricing is highly competitive: at launch, R1 cost 1 yuan (cache hit) to 4 yuan (cache miss) per million input tokens and 16 yuan per million output tokens. This cost-performance ratio lowers the cost of trial and error for developers, and the resulting adoption and feedback accelerate the model’s iterative “flywheel effect.”
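According to the R1 technical report, the reinforcement learning signal comes from simple rules rather than human labels or a learned reward model: an accuracy reward checks the final answer (for math, an answer in a specified format that can be compared with the reference), and a format reward checks that the reasoning is enclosed in `<think>` tags. The policy is optimized with Group Relative Policy Optimization (GRPO), which samples a group of answers per question and scores each against the group’s mean and standard deviation instead of training a separate critic model. The sketch below illustrates only the reward and advantage steps, not the policy update; the reward weights, regular expressions, and function names are hypothetical.

```python
import re
from statistics import mean, pstdev

# Hypothetical rule checks; the weights 0.5 and 1.0 are illustrative, not from the report.
THINK_FORMAT = re.compile(r"^<think>.*?</think>", re.DOTALL)
BOXED_ANSWER = re.compile(r"\\boxed\{([^{}]*)\}")


def rule_based_reward(response: str, reference_answer: str) -> float:
    """Score a response with text rules only: no human labels, no reward model."""
    reward = 0.0
    if THINK_FORMAT.match(response):  # format reward: reasoning wrapped in <think> tags
        reward += 0.5
    found = BOXED_ANSWER.search(response)
    if found and found.group(1).strip() == reference_answer:  # accuracy reward
        reward += 1.0
    return reward


def group_relative_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """GRPO-style advantage: each reward normalized by its group's mean and std."""
    mu, sigma = mean(rewards), pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# One question, a group of four sampled answers (made-up data).
group = [
    "<think>2 + 2 = 4</think> The answer is \\boxed{4}.",
    "<think>2 + 2 = 5</think> The answer is \\boxed{5}.",
    "The answer is \\boxed{4}.",
    "I am not sure.",
]
rewards = [rule_based_reward(r, "4") for r in group]
advantages = group_relative_advantages(rewards)
print(rewards)                            # [1.5, 0.5, 1.0, 0.0]
print([round(a, 2) for a in advantages])  # [1.34, -0.45, 0.45, -1.34]
```

Answers that beat their group’s average get positive advantages and are reinforced; the rest are discouraged. Using the group itself as the baseline is what lets GRPO do without a critic model.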

Ethical Considerations and Future Challenges

While DeepSeek-R1 has brought many positive changes, it also faces some ethical challenges. As AI technology becomes more powerful and accessible, issues such as data privacy, algorithmic bias, and the potential misuse of AI need to be carefully considered. DeepSeek must ensure that its models are used responsibly and ethically, and it needs to work with the global community to establish appropriate guidelines and regulations.

Conclusion

The success of DeepSeek-R1 during the Spring Festival is a testament to the power of innovation and openness. By combining excellent performance, an open-source strategy, and cost efficiency, DeepSeek has challenged the dominance of established AI giants and opened up new possibilities for the development of AI technology. As DeepSeek continues to grow, it is likely to play an important role in shaping the future of AI.

Frequently Asked Questions

- What are the main performance advantages of DeepSeek-R1 compared to other models?

- DeepSeek-R1 performs strongly in several key areas, including mathematical reasoning, coding, and natural-language reasoning. It achieved a pass@1 score of 79.8% on AIME 2024 and 97.3% on MATH-500. It also demonstrated expert-level coding ability, with a 2,029 Elo rating on Codeforces that surpasses 96.3% of human participants.

- Why did DeepSeek choose an open-source strategy?

- DeepSeek’s open-source strategy aims to promote innovation and community engagement. By publishing its model weights and a technical report describing its architecture and training method, DeepSeek allows developers and researchers worldwide to reproduce, improve, and customize the model. This accelerates the development of AI technology and creates a more inclusive, collaborative ecosystem.

- How does DeepSeek-R1 achieve such low training costs?

- DeepSeek-R1 relies heavily on large-scale reinforcement learning with rule-based rewards, which reduces the need for manually labeled data: the model improves by generating answers and being rewarded when they are verifiably correct, rather than by imitating human-written examples. R1 is also built on DeepSeek-V3, whose final training run reportedly cost less than $6 million, roughly one-tenth of the estimated cost of comparable models in the United States. That figure covers only the final run, not earlier research and experiments.

- What are the potential ethical issues associated with DeepSeek-R1?

- As with any powerful AI technology, DeepSeek-R1 raises concerns about data privacy, algorithmic bias, and the potential misuse of AI. It is important for DeepSeek to ensure that its models are used responsibly and ethically, and to work with the global community to establish appropriate guidelines and regulations.

- How does DeepSeek-R1’s API pricing compare to other AI services?

- DeepSeek-R1’s API is highly cost-effective: at launch, input tokens cost 1 yuan (cache hit) to 4 yuan (cache miss) per million and output tokens cost 16 yuan per million. This is significantly cheaper than other major AI services, making the model accessible to a wider range of developers and businesses.

- What are the future plans for DeepSeek-R1?

- DeepSeek plans to keep improving the model’s performance and expanding its capabilities. Future iterations may focus on broader language support, reduced sensitivity to prompt wording, stronger performance on software engineering tasks, and the development of specialized task datasets. DeepSeek is also likely to keep engaging with the global AI community to drive further innovation and adoption of its technology.
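Because API prices are quoted per million tokens, the cost of one call is a weighted sum of its token counts. The sketch below is currency-agnostic; its default rates are the figures quoted in the pricing answer above, with the low end of the input range assumed to be the price for cached input and the high end the price for uncached input. The function name `request_cost` is hypothetical, and for a reasoning model the output count is assumed to include the chain-of-thought tokens, which can make output the largest part of the bill.

```python
def request_cost(
    input_tokens: int,
    output_tokens: int,
    cached_input_tokens: int = 0,
    price_cached_input: float = 1.0,    # per million tokens (assumed cache-hit rate)
    price_uncached_input: float = 4.0,  # per million tokens (assumed cache-miss rate)
    price_output: float = 16.0,         # per million tokens, reasoning tokens included
) -> float:
    """Estimate the cost of one API call from per-million-token prices."""
    if not 0 <= cached_input_tokens <= input_tokens:
        raise ValueError("cached_input_tokens must be between 0 and input_tokens")
    uncached = input_tokens - cached_input_tokens
    total = (
        cached_input_tokens * price_cached_input
        + uncached * price_uncached_input
        + output_tokens * price_output
    )
    return total / 1_000_000


# Hypothetical call: 2,000-token prompt (1,000 served from cache), 3,000 output tokens.
print(f"{request_cost(2_000, 3_000, cached_input_tokens=1_000):.4f}")  # 0.0530
```

In this example the output tokens account for 48,000 of the 53,000 weighted units, which is why long reasoning traces dominate the cost of a call.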